As a Dallas emergency physician, Harvey Castro, MD, began experimenting with ChatGPT – an artificial intelligence (AI) chatbot trained on data from the internet to generate human-like responses to text prompts – in November 2022 after asking himself what he’d “want from AI in a hospital setting.”

He then used ChatGPT to automate daily tasks, like generating patient appointment reminders, writing discharge summaries, and drafting home care instructions for patients.

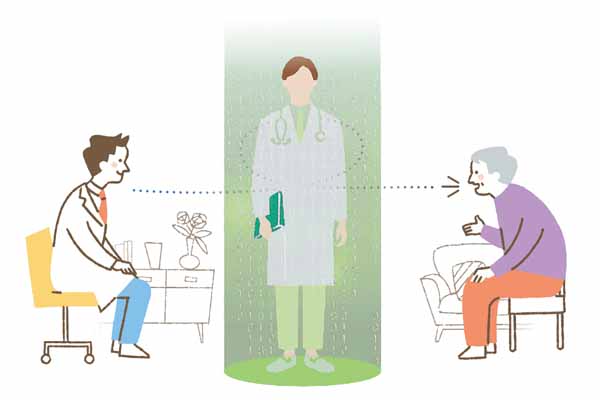

Impressed, Dr. Castro, now a ChatGPT and health care strategic adviser, concluded that physicians using AI outperform both the best humans and the best AI models working alone. He summed up that idea as an equation, “human + AI > best human > best AI,” when he opened the Texas Medical Association’s 2024 joint TexMed and House of Delegates session in Dallas on May 3.

“I see these tools … as a supplement,” Dr. Castro said during the event. “Let’s use this for our patients. Take your knowledge and put it in a large language model.”

AI technology has been integrated into multiple electronic health record (EHR) systems and physician platforms, including Epic, Doximity, eClinicalWorks, and Athenahealth, whose GPT-based tools can format clinical documentation and common medical correspondence, among other functions.

In fact, Dr. Castro says many of his colleagues have shared that their AI-integrated EHR systems now produce “better medical record or discharge summaries” than manual compilation of handwritten or typed notes, lab results, and other pertinent information, material that clinicians often spend a significant amount of time organizing and summarizing.

Although clinical AI is still in the early stages of development and use, a 2019 report from the National Academy of Medicine identified three potential benefits: better outcomes for both patients and clinical teams, lower health care costs, and improved population health (tma.tips/NAMReport2019).

For example, Dr. Castro says AI can help patients manage chronic illnesses, like asthma, diabetes, and high blood pressure, by connecting them with relevant screening and therapy options and reminding them to take their medication or schedule follow-up appointments.

However, he advises physicians not to become overly reliant on AI, noting that they still need to “double check” the technology, especially as patients turn to “Dr. ChatGPT” instead of real-world physicians.

“One of my biggest worries is the danger of physicians becoming dependent on this technology,” Dr. Castro said. “I’m always hesitant to use AI in clinical care until I’ve verified the gadget or model.”

Physician oversight

According to an October 2023 Medscape survey, two out of three physicians are worried about AI driving diagnosis and treatment decisions. In fact, 88% of respondents believed it was at least somewhat likely that patients who turn to generative AI like ChatGPT for medical information would receive some misinformation.

Dr. Castro says ChatGPT and similar models have “major limitations” physicians should be aware of, including:

- Narrow understanding of context;

- Limited data privacy;

- Bias-encoded algorithms;

- Unpredictable responses; and

- Hallucinations, or nonsensical outputs.

He admits he worries that physicians may be “unaware” of common AI terminology, like neural networks and predictive analytics, and he is particularly concerned that they understand the most worrisome item on that list: hallucinations, which occur when AI models generate false, misleading, or illogical information but present it as fact.

“Physicians need to make sure that no matter what, they are always verifying all AI technology they use in their practice,” he said.

His warning mirrors TMA’s 2022 policy, which states that AI should be used as an augmented tool set. Whereas artificial intelligence relies on internet data to drive its decisions, augmented intelligence serves as an enhancement aid and defers to human knowledge in crafting its responses.

That approach may help physicians address future liability concerns, Dr. Castro says. The Food and Drug Administration currently regulates some AI-powered medical devices, but he says its approach to the rapidly evolving technology is still in the early stages, so the liability implications for physicians using generative AI in health care remain largely unknown.

However, he predicts that as AI technology becomes more integrated into patient care, determining liability will boil down to whether mistakes were the result of AI decisions, human error, or a combination of both.

“We need to educate our patients on the good, the bad, and the ugly, and we need to ask AI manufacturers the hard questions, because they may not be forthcoming with those answers,” he told Texas Medicine.

Additionally, Dr. Castro recommends physicians “compare apples to apples” when considering AI technology for their practice. For example, a free edition of generative or predictive software will not produce the same results as a paid subscription version.

“My follow-up question to dissatisfaction around AI is, [whether] a clinician [used] the latest edition or if they defaulted to the free, less adequate option,” he said. “You may find the more expensive version is actually worth the cost.”

From there, Dr. Castro recommends that physicians using ChatGPT or similar models take advantage of the custom instructions setting within their AI tools. To do this, clinicians should first describe the AI’s role and functions. This can look like:

“You are an AI trained by OpenAI, based on the GPT-4 architecture. You have extensive knowledge in various domains until 2021, including health care, ethics, and [physician specialty]. You’re assisting a seasoned physician with a strong background in [physician specialty].”

AI text responses should then:

- Have a tone that is professional yet empathetic, aligning with the communication style of a physician;

- Tailor information to a health care setting, emphasizing the physician’s specialty and why he or she is choosing to use AI;

- Deliver detailed explanations and, where appropriate, real-world examples to illustrate complex or difficult topics;

- Verify the accuracy and relevance of AI-generated information;

- Confirm responses are grammatically correct; and

- List references for all responses.
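For physicians who want to reuse these instructions across a practice, the two-part structure above (a role description, then response guidelines) can be sketched as a small Python helper that fills in the [physician specialty] placeholder in one place. This is a minimal illustration, not a tool Dr. Castro described: the function name `build_custom_instructions` and the constants are hypothetical, the guideline wording is paraphrased from the list above, and the sketch assumes the AI tool accepts plain-text custom instructions. It only assembles text and does not connect to ChatGPT or any other service.

```python
# Hypothetical helper: assembles the role description and response guidelines
# described above into plain text. It does not call any AI service; pasting
# the result into a tool's custom instructions setting is left to the user.

ROLE_TEMPLATE = (
    "You are an AI trained by OpenAI, based on the GPT-4 architecture. "
    "You have extensive knowledge in various domains until 2021, including "
    "health care, ethics, and {specialty}. You're assisting a seasoned "
    "physician with a strong background in {specialty}."
)

# Response guidelines paraphrased from the article's list (assumed wording).
RESPONSE_GUIDELINES = [
    "Use a tone that is professional yet empathetic, aligning with the "
    "communication style of a physician.",
    "Tailor information to a health care setting, emphasizing {specialty} "
    "and the reason the physician is using AI.",
    "Deliver detailed explanations and, where appropriate, real-world "
    "examples to illustrate complex or difficult topics.",
    "Verify the accuracy and relevance of the information provided.",
    "Confirm responses are grammatically correct.",
    "List references for all responses.",
]


def build_custom_instructions(specialty: str) -> str:
    """Return the role text plus bulleted response guidelines for one specialty."""
    specialty = specialty.strip()
    if not specialty:
        raise ValueError("specialty must be a non-empty string")
    # Fill the same specialty into every placeholder so the parts never disagree.
    role = ROLE_TEMPLATE.format(specialty=specialty)
    rules = "\n".join(
        f"- {rule.format(specialty=specialty)}" for rule in RESPONSE_GUIDELINES
    )
    return f"{role}\n\nResponses should:\n{rules}"


if __name__ == "__main__":
    print(build_custom_instructions("emergency medicine"))
```

Keeping the specialty in a single argument avoids mismatched placeholders when the same instructions are adapted for colleagues in other specialties.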

Since his original experimentation, Dr. Castro has written multiple books about AI to bridge the gap between technology and medicine and to foster collaboration among physicians.

Additionally, Dr. Castro has aimed to reduce burdens in health care by developing more than 30 smartphone apps for health care professionals and patients, with functions ranging from calculating dosages to helping patients find the emergency room with the shortest wait time.

He sees AI as a new extension of that work.

“For better or for worse, [AI] is coming,” he said. “At the end of the day, we need doctors in the loop.”